One question that I'm always asked is, "What's the best PC setup for deep learning?" But I think we need to tackle an even more fundamental question first: Should you build your own rig or just go with the cloud?

The Cloud vs DIY Workstation

First, let's be real - cloud computing is like Shopee Add-to-Cart. It's convenient, requires zero setup, and you don't have to deal with the mess of installing operating systems or debugging package dependencies. Trust me, I've been there and done that!

It's no wonder that many people are jumping on the cloud bandwagon. It's the same reason we happily pay for Netflix instead of dealing with sketchy torrent sites (remember those days? 😅).

But here's the thing - while the cloud is tempting, I'm going to make a case for why building your own deep learning rig is the smarter move, especially if you expect to train a lot of models.

Numbers Don't Lie

Let me break down a recent training scenario from our team. We'll compare our NVIDIA RTX 4070 Ti setup against a cloud-based V100 GPU. To keep the discussion simple, assume these two GPUs have similar compute performance.

Here's what our typical workflow looks like:

- Task: Object Detection (We'll discuss more about this in future articles)

- Single training experimental run: 8 hours

- Frequency: 1 experiment per day (trust me, you'll want to run multiple experiments)

- 30-day usage: 240 GPU hours

Let's look at the cold, hard numbers:

| Cost Factor | Rig (RTX 4070 Ti) | Google Cloud (V100) |
|---|---|---|
| One-Time Fee | PHP 120,000 | PHP 0 |
| Monthly Fee | PHP 0 | PHP 35,116.80 |
| One Year Cost | PHP 120,000 | PHP 421,401.60 |

Here's the crazy part: Having your own rig pays for itself in about 3.4 months compared to cloud computing (PHP 120,000 divided by PHP 35,116.80 per month)! 🤯

This is how expensive it is to use a GPU in the cloud through popular service providers! It's also the main reason why I always recommend going through the headache of building your own rig.
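If you want to plug in your own numbers, the arithmetic behind the table can be sketched in a few lines of Python. The function and constant names here are my own for illustration, and the sketch makes the same simplifying assumption as the table: it counts only the rig's one-time price and the cloud's monthly fee, nothing else (no electricity, no storage fees).

```python
# Figures taken from the cost table above (Philippine pesos).
RIG_ONE_TIME_PHP = 120_000.00
CLOUD_MONTHLY_PHP = 35_116.80

# Workload from the workflow list: 8 hours per run, 1 run per day, 30 days.
GPU_HOURS_PER_MONTH = 8 * 1 * 30


def break_even_months(rig_cost: float, cloud_monthly: float) -> float:
    """Months of cloud usage that add up to the rig's one-time cost."""
    return rig_cost / cloud_monthly


def cloud_cost(months: int, cloud_monthly: float = CLOUD_MONTHLY_PHP) -> float:
    """Cumulative cloud spend after the given number of months."""
    return cloud_monthly * months


if __name__ == "__main__":
    rate = CLOUD_MONTHLY_PHP / GPU_HOURS_PER_MONTH
    months = break_even_months(RIG_ONE_TIME_PHP, CLOUD_MONTHLY_PHP)
    print(f"GPU hours per month: {GPU_HOURS_PER_MONTH}")
    print(f"Implied cloud rate: PHP {rate:.2f} per GPU hour")
    print(f"Break-even point: {months:.1f} months")
    print(f"One-year cloud cost: PHP {cloud_cost(12):,.2f}")
```

Change the hours per run or the runs per day to match your own workflow. The more you train, the faster the rig pays for itself.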

Selecting Your Next GPU

If you're now convinced to build your own, that leads us to the next question: "Which GPU should I select?" If you have all the money in the world like Meta or Google, you simply buy the top of the line. But if you're like me and keep asking, "Which one is sulit?" (which one is worth the money), then you've come to the right place.

You can think of GPU shopping like buying a car: you could splash out on a Ferrari (H100), but if you're mostly using it for your daily commute, you might be better off with a Toyota.

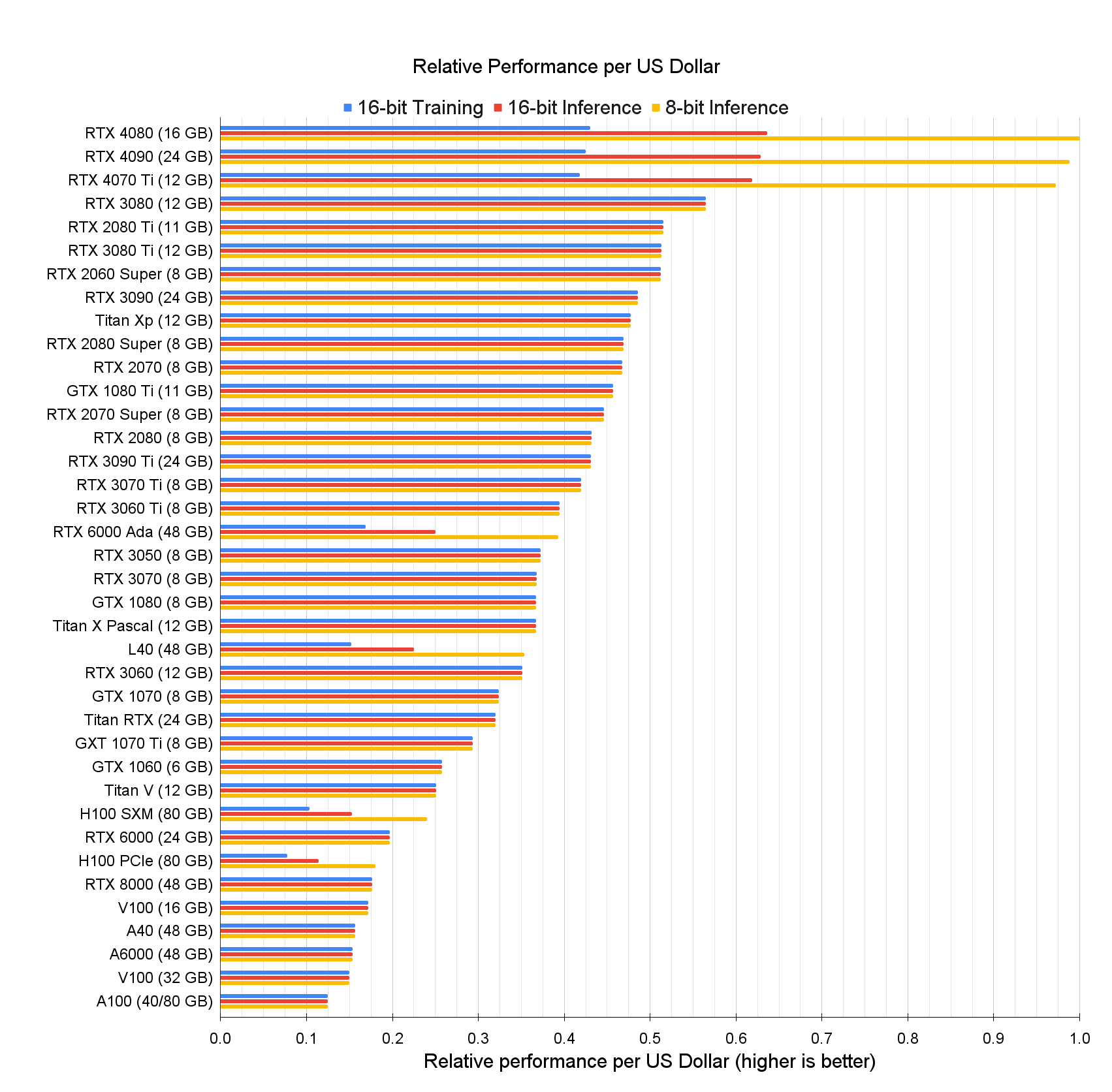

Fortunately, someone has already done the benchmarking. The graph above shows their results across different GPUs. It answers one question: "Which GPU is the most cost-effective to purchase?" In other words, which GPU gives the best bang for the buck?

Here are some insights we can get here:

- The new generation provides better training time. Each new GPU generation from NVIDIA is like a new iPhone release - they keep getting better.

- But here's where it gets interesting. When you look at the performance scaling between the 4070 Ti, 4080, and 4090, something curious happens. The performance increase isn't linear with the price. In fact, the difference in training speed between a 4070 Ti and its more expensive siblings isn't as dramatic as you might expect.

- Compared to the RTX 3000 Series, the RTX 4000 Series is mostly about improving inference speed. Inference performance matters when you want to deploy an AI system into production.

So here's the bottom line for a cost-effective GPU:

- RTX 3080: when most of your GPU time goes to training

- RTX 4070 Ti: when you also plan to use the GPU for deployment

And...of course, if you're rich and can afford it, it's H100! 😎

CAVEAT: Now, before you think "Great, 4070 Ti it is!", the 4080 and 4090 do have one major advantage: they come with more VRAM (video memory, the GPU's own onboard RAM). Think of VRAM like your workspace desk - the bigger it is, the more stuff you can spread out on it at once. In deep learning terms, this means you can work with larger models and bigger batch sizes during training.

But - and this is a big but - more VRAM doesn't automatically mean faster computation. A bigger desk doesn't necessarily make you work faster; it just means you can handle more materials at once. If you're working with standard models or can manage your batch sizes effectively, the 4070 Ti's memory won't be a bottleneck.
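To get a feel for how much "desk space" a model needs, here is a back-of-the-envelope sketch. It is not a profiler: it assumes plain FP32 training with an Adam-style optimizer (weights, gradients, and two optimizer states per parameter, 4 bytes each). The function names and the example parameter counts are hypothetical. Activation memory, which grows with batch size, has to be supplied as your own estimate.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def static_training_memory_gib(
    num_params: int,
    bytes_per_value: int = 4,   # assumption: FP32 values
    optimizer_states: int = 2,  # assumption: Adam keeps two states per parameter
) -> float:
    """Rough memory for weights + gradients + optimizer states, in GiB."""
    copies = 1 + 1 + optimizer_states  # weights, gradients, optimizer states
    return num_params * bytes_per_value * copies / GIB


def fits_in_vram(num_params: int, vram_gib: float, activation_gib: float) -> bool:
    """True if the static memory plus your activation estimate fits in VRAM."""
    return static_training_memory_gib(num_params) + activation_gib <= vram_gib


if __name__ == "__main__":
    # Hypothetical model sizes, checked against a 12 GB card such as the 4070 Ti.
    for name, params in [("25M-parameter model", 25_000_000),
                         ("7B-parameter model", 7_000_000_000)]:
        static = static_training_memory_gib(params)
        ok = fits_in_vram(params, vram_gib=12, activation_gib=8.0)
        print(f"{name}: {static:.2f} GiB static, fits in 12 GB: {ok}")
```

For small and mid-sized models, the static part is tiny and most of the memory goes to activations, which is why lowering the batch size is usually enough to make a model fit.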

The 4070 Ti hits the sweet spot where you get:

- Excellent compute performance from the latest generation

- Enough VRAM (12 GB) for most standard deep learning tasks

- A price point that won't have you eating instant noodles for the next year

Of course, if you're planning to work with large language models and Generative AI, you'll need a card with more VRAM, like the NVIDIA RTX 6000 Ada Generation (48 GB). But you know what, for Generative AI, the cloud vs rig question becomes a different ball game. We'll create a separate article for that.

The Bottom Line

If you're serious about deep learning and plan to run lots of experiments in the coming years, build your own rig. The RTX 4070 Ti hits that sweet spot of performance and value for cost-sensitive researchers. It's like getting a Toyota Vios instead of a Ferrari. Sure, the Ferrari's faster, but do you need to pay 3x more for your grocery runs?

What's your take on this? Let me know your thoughts. Drop me an email. I'd love to hear from you.

PS: If you found this helpful, consider subscribing to my newsletter, where I share tips about AI and deep learning.